Generative AI’s Biggest Danger Isn’t the Tech - It’s the Lack of Policy

Interview with Anthony DeSimone, Owner of You’re the Expert Now LLC and Generative AI Specialist

Anthony DeSimone has worked with hundreds of organizations across industries, helping leaders safely adopt generative AI while avoiding pitfalls and risks. In this interview, DeSimone shares why a formal AI usage policy is essential and outlines the structure every company should follow. Each “Tip” comes directly from his experience guiding companies through their first generative AI usage policies.

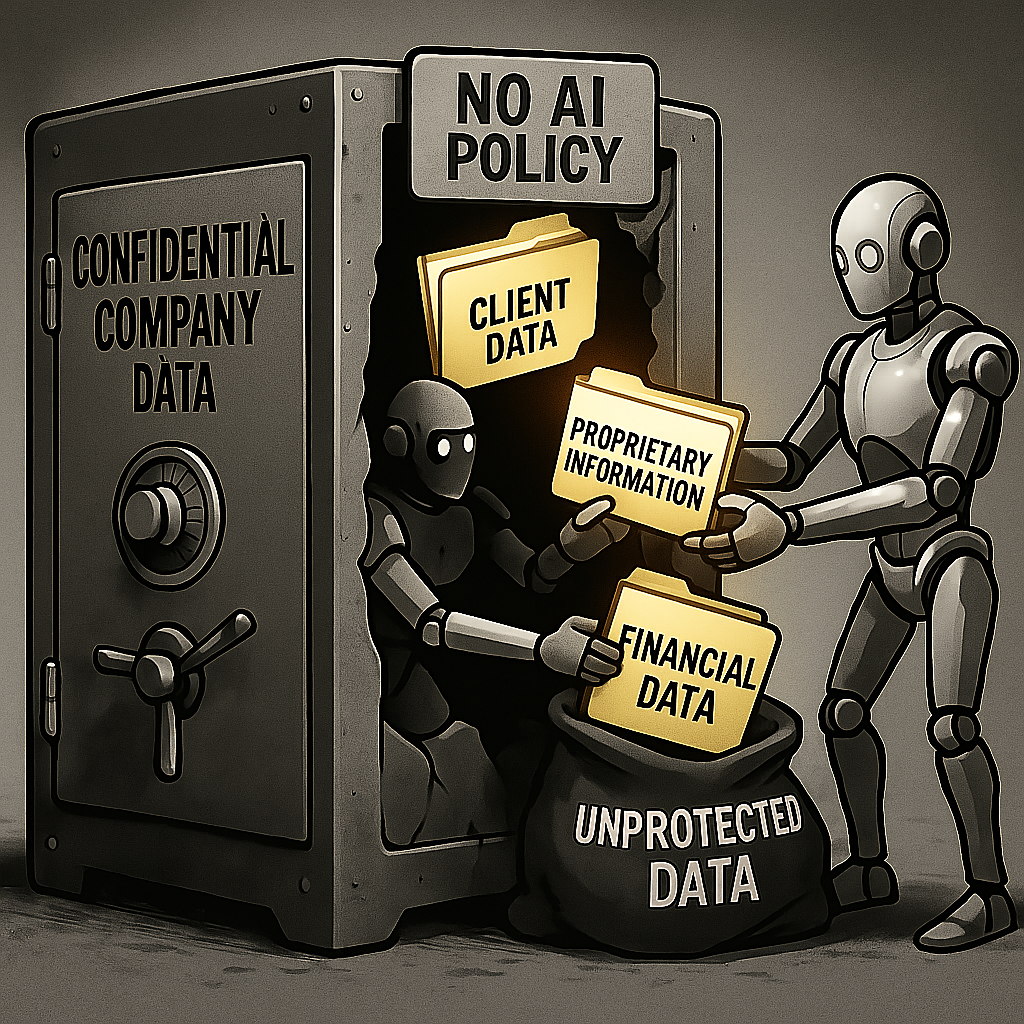

The Growing Risk of Shadow AI

Surveys show that roughly 42% of employees use generative AI tools like ChatGPT at work, often without informing leadership, a practice known as shadow AI.

“This shadow use is where the biggest risks come from,” DeSimone explains. “If leaders don’t provide guardrails, employees will make their own rules.”

A 2024 study by Harmonic Security revealed that over 4% of prompts and more than 20% of uploaded files to AI platforms contained sensitive corporate data. According to DeSimone, “These aren’t rare slipups. They’re a clear warning sign that companies must act.”

Real-World Examples

Stories of employees mishandling generative AI are surfacing across nearly every industry, and almost all of them point back to the same root cause: a lack of training and clear guidance.

In 2023, Samsung engineers inadvertently uploaded proprietary source code and confidential internal documents into ChatGPT, exposing sensitive intellectual property and prompting the company to impose a blanket ban on generative AI tools.

In a case handled by Morgan & Morgan, three attorneys were sanctioned by a federal court in Wyoming in February 2025 after submitting a motion that cited eight fabricated cases produced by AI. The firm responded by tightening verification protocols and mandating additional training for all attorneys.

At a healthcare provider, a staff member used a free AI writing assistant to summarize patient records, not realizing the data was being stored on external servers—potentially violating HIPAA regulations.

In marketing, an employee at a consumer goods company fed competitor research into an AI tool, inadvertently disclosing trade secrets and causing reputational damage when the information appeared in outputs shared externally.

In HR, a recruiter relied on AI to screen resumes and generated biased scoring recommendations that disadvantaged female applicants, putting the company at risk of regulatory scrutiny.

“These stories repeat themselves across industries,” DeSimone says. “What they all have in common is the absence of a clear, enforceable policy.”

What a Robust AI Usage Policy Needs to Include

“A strong AI usage policy does two things,” DeSimone explains. “It protects the organization from risk, and it empowers employees to use AI responsibly and effectively.” Below are the essential components:

1. Definitions

The policy should start with clear definitions to avoid confusion. These definitions create a shared language across the organization, ensuring that employees, managers, and leadership interpret critical terms consistently. Without this foundation, even well‑intentioned policies can lead to misunderstandings, compliance gaps, or uneven enforcement. Examples include: Generative AI, Confidential Data, Proprietary Information, Approved Tools, Hallucinations, Shadow AI, Large Language Model (LLM), Prompt, Training Data, Token, Bias, Data Privacy.

Tip (DeSimone): “These are just starting points. Customize the list to your organization. The goal is alignment—everyone needs to be speaking the same language.”

2. Approved Tool List (Overview)

Organizations should clearly state that employees may only use tools listed on the Approved Tool List. For teams new to AI or with limited training, DeSimone emphasizes caution: only add tools with strong security and encryption safeguards, so leadership can be confident that no confidential information is exposed through systems that train on user data.

Tip (DeSimone): “If your team is new to AI, or if you’re handling highly confidential information, keep it simple. Only allow tools approved for confidential data use. If it’s not on the list, it’s off limits.”

3. IT Governance: Security and Encryption Standards for Safe AI Use

IT must clearly define the level of security and encryption standards that generative AI tools must meet in order to be considered safe for handling confidential and proprietary information. This includes ensuring the tool does not train on or retain sensitive company data. Without these requirements, the organization risks exposing critical information and will be unable to safely expand its list of approved tools.

Tip (DeSimone): “Without clear security and encryption guardrails, employees may wrongly assume that any system is safe. Establishing these standards upfront protects sensitive data, reduces risk, and gives the organization confidence to responsibly evaluate and adopt new AI tools.”
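As a rough illustration of how IT might turn such standards into a repeatable check, here is a minimal Python sketch. The class, field names, and the four criteria are assumptions made for this example; the article only specifies that a tool must not train on or retain sensitive company data and must meet security and encryption standards that IT defines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSecurityProfile:
    """Vendor-reported properties of a generative AI tool (hypothetical fields)."""
    name: str
    trains_on_customer_data: bool
    retains_prompts: bool
    encrypts_in_transit: bool
    encrypts_at_rest: bool


def unmet_requirements(profile: ToolSecurityProfile) -> list[str]:
    """Return the baseline requirements the tool fails; an empty list means it passes."""
    failures = []
    if profile.trains_on_customer_data:
        failures.append("trains on company data")
    if profile.retains_prompts:
        failures.append("retains submitted data")
    if not profile.encrypts_in_transit:
        failures.append("no encryption in transit")
    if not profile.encrypts_at_rest:
        failures.append("no encryption at rest")
    return failures


# Illustrative profile only; it does not describe any real product.
free_assistant = ToolSecurityProfile(
    name="Free writing assistant",
    trains_on_customer_data=True,
    retains_prompts=True,
    encrypts_in_transit=True,
    encrypts_at_rest=False,
)
print(unmet_requirements(free_assistant))
```

Returning the list of failed requirements, rather than a bare pass/fail, gives the reviewer something concrete to send back to the employee or the vendor.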

4. New Tool Approval Process

Employees will inevitably discover new AI tools, and without a clear approval process this can create serious risks. Building on the standards outlined in section 3, organizations should establish a workflow that explains exactly how a new tool will be reviewed and either approved or denied. This process ensures enthusiasm for innovation is balanced with safeguards that protect confidential information, maintain compliance, and prevent shadow AI.

Submission: Employee provides tool name, purpose, and use case.

Evaluation: IT reviews security, Legal reviews compliance.

Decision: Tool is approved, restricted, or denied.

Communication: The decision is documented, and the tool is added to (or excluded from) the appendix.

Tip (DeSimone): “By documenting a process, you make it clear to employees that there is a safe path for introducing new tools. This openness shows the organization supports innovation responsibly. Making the process visible reduces the chances that employees bring in unapproved tools, helps prevent shadow AI, and builds trust.”
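The four steps above (submission, evaluation, decision, communication) could be modeled along the following lines. This is a sketch under stated assumptions, not a prescribed implementation: the class and function names are hypothetical, and the rule that maps review results to "restricted" is one possible choice that each organization would define for itself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVED = "approved"
    RESTRICTED = "restricted"
    DENIED = "denied"


@dataclass
class ToolRequest:
    # Submission: the employee provides tool name, purpose, and use case.
    tool_name: str
    purpose: str
    use_case: str
    # Evaluation: set by IT and Legal; None means the review is still pending.
    it_security_ok: Optional[bool] = None
    legal_compliance_ok: Optional[bool] = None


def decide(request: ToolRequest) -> Decision:
    """Decision step. The mapping below is an assumption for illustration."""
    if request.it_security_ok is None or request.legal_compliance_ok is None:
        raise ValueError("IT and Legal must both complete their reviews first")
    if request.it_security_ok and request.legal_compliance_ok:
        return Decision.APPROVED
    if request.legal_compliance_ok:
        # Assumed rule: compliant but below the security bar means
        # the tool may be used for non-confidential work only.
        return Decision.RESTRICTED
    return Decision.DENIED


def communicate(request: ToolRequest, decision: Decision) -> str:
    """Communication step: the record that would be documented for the appendix."""
    return f"{request.tool_name}: {decision.value} (requested for {request.use_case})"


request = ToolRequest("ExampleSummarizer", "summarize meeting notes", "internal operations")
request.it_security_ok = False      # IT review result
request.legal_compliance_ok = True  # Legal review result
print(communicate(request, decide(request)))
```

Refusing to decide until both reviews are recorded keeps a tool from slipping through on a partial evaluation.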

5. Training and Accountability

Training is not a one-time exercise. Because generative AI is evolving rapidly, with new tools emerging every week and AI features being integrated into the software employees already use, ongoing education is essential.

Training should cover:

What data is safe.

The risks and capabilities of generative AI.

How to use approved tools.

How to verify output.

Red flags for misuse.

Accountability: employee sign-off, with managers enforcing compliance.

Tip (DeSimone): “Training must be ongoing. Teach both features and risks. Pair that with accountability so employees know rules apply to them.”

6. Risks of Using Generative AI

The risks below affect both the organization and its employees. They are not theoretical: they are already being seen across industries, and each is a challenge companies must address in their AI usage policies. Among the most important are:

Confidentiality Breach

Hallucinations and Inaccuracy

Bias and Fairness Issues

Over-Reliance

Legal Violations

Reputation Damage

Shadow AI

Tip (DeSimone): “Many of the risks described above can be significantly reduced if humans remain actively involved throughout the process. Generative AI tools are not end-to-end products and must always be paired with human oversight. People must frame the prompt at the beginning to ensure the AI is being asked the right question, and they must carefully review the output at the end to catch errors, bias, or hallucinations. When used this way, AI strengthens human decision‑making instead of replacing it. I call this the ‘People First, People Last’ philosophy.”
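For teams that build AI into internal workflows, the "People First, People Last" idea can be expressed as a simple wrapper in which a person supplies the prompt and a person must sign off on the result. The sketch below is only an illustration; the function name and the three callables are hypothetical, and the `generate` step stands in for whatever approved tool is actually used.

```python
from typing import Callable, Optional


def people_first_people_last(
    frame_prompt: Callable[[], str],
    generate: Callable[[str], str],
    review: Callable[[str], Optional[str]],
) -> str:
    """Run one AI-assisted task with a human at the start and at the end."""
    prompt = frame_prompt()    # People first: a person frames the question.
    draft = generate(prompt)   # The AI tool produces a draft.
    approved = review(draft)   # People last: reviewer returns final text, or None to reject.
    if approved is None:
        raise ValueError("Reviewer rejected the AI draft; nothing is released")
    return approved


# Stand-in callables for demonstration; a real workflow would involve actual people and tools.
result = people_first_people_last(
    frame_prompt=lambda: "Summarize the Q3 policy update in two sentences.",
    generate=lambda prompt: f"DRAFT for: {prompt}",
    review=lambda draft: draft.replace("DRAFT", "REVIEWED"),
)
print(result)
```

The point of the structure is that there is no code path that releases AI output without passing through the review step.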

7. Consequences of Non-Compliance

Make consequences clear, while also emphasizing that generative AI is an important tool for productivity and innovation. The goal is not to make policies so restrictive that employees avoid using AI, but to set reasonable boundaries that protect the organization and encourage responsible adoption:

First offense: retraining and written notice.

Repeated offenses: suspension of access.

Severe misuse: up to termination.

Tip (DeSimone): “Consequences should be fair. Early violations focus on education. Repeated or intentional ones require stronger action.”

Appendix: Approved Tool List

The appendix is a living record of approved tools. With new generative AI tools launching almost weekly and many being integrated into existing software, this section must be actively maintained and updated regularly. Treat it as an evolving resource that reflects the organization’s most current security and compliance standards. Include:

Tool Name (e.g., ChatGPT, Gemini, Copilot).

Model/Version (e.g., GPT-4, Gemini 1.5 Pro).

Plan Type (e.g., Enterprise).

Confidential Use Allowed? Yes/No.

Approved Use Cases (e.g., marketing, research).

Tip (DeSimone): “Be specific about the tools and plan types. Don’t just say ‘ChatGPT’—say ChatGPT Enterprise. Otherwise employees may assume the free version is okay.”
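One way to keep the appendix machine-checkable is to store each entry with the five fields listed above and look requests up against it. The following Python sketch assumes hypothetical names (`ApprovedTool`, `APPROVED_TOOLS`, `is_use_permitted`), and the two entries are placeholders built from the article's examples, not recommendations about which tools or plans are safe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApprovedTool:
    tool_name: str
    model_version: str
    plan_type: str
    confidential_use_allowed: bool
    approved_use_cases: tuple[str, ...]


# Placeholder entries for illustration only.
APPROVED_TOOLS = [
    ApprovedTool("ChatGPT", "GPT-4", "Enterprise", True, ("marketing", "research")),
    ApprovedTool("Gemini", "Gemini 1.5 Pro", "Enterprise", False, ("research",)),
]


def is_use_permitted(
    tool_name: str,
    plan_type: str,
    use_case: str,
    involves_confidential_data: bool,
) -> bool:
    """Return True only if the exact tool and plan are listed and the use fits the entry."""
    for tool in APPROVED_TOOLS:
        if tool.tool_name == tool_name and tool.plan_type == plan_type:
            if use_case not in tool.approved_use_cases:
                return False
            if involves_confidential_data and not tool.confidential_use_allowed:
                return False
            return True
    # Not on the list means off limits.
    return False


print(is_use_permitted("ChatGPT", "Enterprise", "marketing", True))
print(is_use_permitted("ChatGPT", "Free", "marketing", False))
```

Matching on both tool name and plan type reflects the tip above: an entry for an Enterprise plan does not authorize the free version of the same product.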

Conclusion

Generative AI is no longer optional for organizations. It is already reshaping the way employees work, whether leadership is ready or not. The choice is simple: either let employees experiment without guidance and accept the risks, or create a clear policy that safeguards the company while empowering teams to use AI effectively. As Anthony DeSimone emphasizes, the goal is not to restrict innovation but to direct it. A strong usage policy ensures that AI becomes a competitive advantage rather than a liability, giving organizations the structure, confidence, and accountability needed to thrive in this new era.

DeSimone cautions: “If leaders do not act now, employees will eventually put confidential information into these tools, and the organization will not realize it until the damage is done.”

Summary

In this interview, Anthony DeSimone, owner of You’re the Expert Now LLC, explains why every organization needs a formal generative AI usage policy and outlines the essential components it must include. With nearly half of employees already using AI tools like ChatGPT at work, often without leadership’s knowledge, companies face growing risks of “shadow AI.” Real-world examples, from Samsung engineers uploading proprietary code to attorneys sanctioned for citing fabricated cases, show how easily misuse can cause reputational, legal, and financial harm. DeSimone emphasizes that a strong policy should define key terms, establish an approved tool list, set security and encryption standards, and provide a clear process for reviewing new tools. Ongoing training and accountability are critical, since AI technology evolves rapidly and misuse often stems from a lack of awareness. He also stresses that generative AI tools must always be paired with human oversight, following his “People First, People Last” philosophy. The ultimate goal is not to stifle innovation but to enable it safely. As DeSimone warns, without clear policies, employees will eventually put confidential information into AI tools, and companies may not discover the damage until it is too late.

Frequently Asked Questions (FAQs)

-

Because employees already use AI at work, often without approval. Without guardrails, this creates confidentiality, compliance, and reputational risks.

-

Shadow AI is when employees use AI tools without company approval or oversight.

-

By approving tools, training employees, setting IT guardrails, and updating policies regularly.

-

Data breaches, hallucinations, bias, legal violations, and loss of trust.

-

Consequences may include retraining, suspension of access, or termination depending on severity.

-

At least monthly, since AI products evolve quickly.

-

It means humans must frame the input and validate the output. AI is a support tool, not a replacement for judgement.